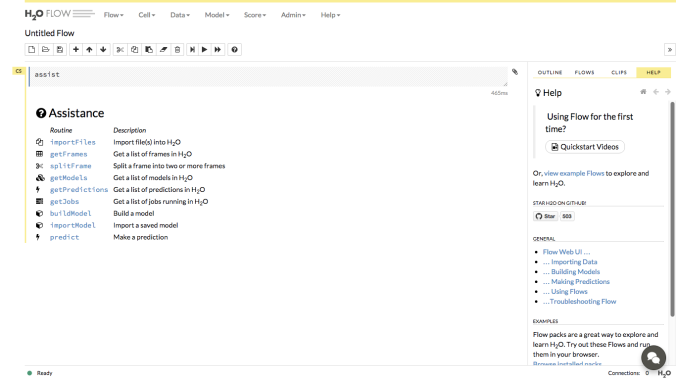

When you start learning to program, it is a good idea to visit the community sites for your language. “R” and “Python” both have big communities that have contributed to the progress of each language, which is good for all users. H2O.ai also held its annual community conference, “H2O WORLD 2015”, this month, and the videos and presentation slides are now available online. I could not attend the conference because it was held in Silicon Valley in the US, but I can still follow and enjoy it just by going through the websites. I recommend having a quick look to see how knowledge and experience are shared at the conference. It is worthwhile for anyone who is interested in data analysis.

1. User communities can accelerate the progress of open source languages

When I started learning “MATLAB®” in 2001, there were few user communities in Japan as far as I knew. So I had to attend paid seminars to learn the language, and they were not cheap. Now most user communities can be joined without any fee, and they have been growing bigger and bigger recently. One of the main reasons is that the number of “open source languages” is increasing. “R” and “Python” are both open source languages. This means that when someone wants to try a language, all they have to do is “download and use it”, so the number of users can grow at an astonishing pace. On the other hand, if someone wants to try “proprietary software” such as MATLAB, they must buy a license before using it. I loved MATLAB for many years and recommended it to my friends. Unfortunately, none of them uses it privately because it is difficult to pay the license fee as an individual. I imagine that most users of proprietary software are in organizations such as companies and universities. In such cases the organization pays the license fees, so individuals have little freedom to choose the languages they want to use. It is also generally difficult to switch from one language to another when proprietary software is used. This is called “vendor lock-in”. Open source languages can avoid that, which is one of the reasons why I love them now. The more people can use a language, the more progress can be achieved. New technologies such as “machine learning” can be developed through user communities because more users will join going forward.

2. Real industry experience can be shared in communities

This is the most exciting part of a community. Because a lot of data scientists and engineers from industry join communities, their knowledge and experience are shared frequently. It is difficult to find this kind of information in other places. For example, the theory of algorithms and methods of programming can be found in the university courses offered as MOOCs, but MOOCs offer little about current industry experience. In contrast, H2O WORLD 2015 has sessions with many professionals and CEOs from industry, who share their knowledge and experience there. It is a treasure not only for experts in data analysis, but also for business people who are interested in data analysis. I would like to share my own experience in user communities in the future.

3. Big companies are supporting user communities

Recently, major IT companies have noticed the importance of user communities and are trying to support them. For example, Microsoft supports the “R Consortium” as a platinum member. Google and Facebook support the communities around open source projects such as “TensorFlow” and “Torch”. They do so because new things are likely to happen and be developed among users outside the companies, so supporting user communities is also beneficial to the big IT companies themselves. Many other IT companies are supporting communities, too. You can find many of their names listed as sponsors of the big user community conferences.

The next big user community conference is “useR! – International R User Conference 2016”. It will be held in June 2016. Why don’t you join us? You may find a lot of things there. It should be exciting!

Note: Toshifumi Kuga’s opinions and analyses are personal views and are intended to be for informational purposes and general interest only and should not be construed as individual investment advice or solicitation to buy, sell or hold any security or to adopt any investment strategy. The information in this article is rendered as at publication date and may change without notice and it is not intended as a complete analysis of every material fact regarding any country, region, market or investment.

Data from third-party sources may have been used in the preparation of this material and I, the author of the article, have not independently verified or validated such data. I and TOSHI STATS.SDN.BHD. accept no liability whatsoever for any loss arising from the use of this information, and reliance upon the comments, opinions and analyses in the material is at the sole discretion of the user.