As the new year starts, I would like to set up a new project for my company. It will benefit not only my company but also readers of this article, because the project will provide good examples of predictive analytics and of implementing new tools and platforms. The project is called the “Deep Learning project” because “Deep Learning” is its core calculation engine. Through the project, I would like to create a “predictive analytics environment”. Let me explain the details.

1. What are the goals of the project?

The project has three goals:

- Obtain knowledge and expertise of predictive analytics

- Obtain solutions for data-driven management

- Obtain basic knowledge of Deep Learning

As more and more big data become available, we need to know how to use them to gain insights so that we can make better business decisions. Predictive analytics is key to data-driven management because it uses data to answer the question “What comes next?”. I hope that, by reading my articles about the project, you can gain expertise in predictive analytics. I believe this is important for all of us, as we live in the digital economy now and will in the future.

2. Why is “Deep Learning” used in the project?

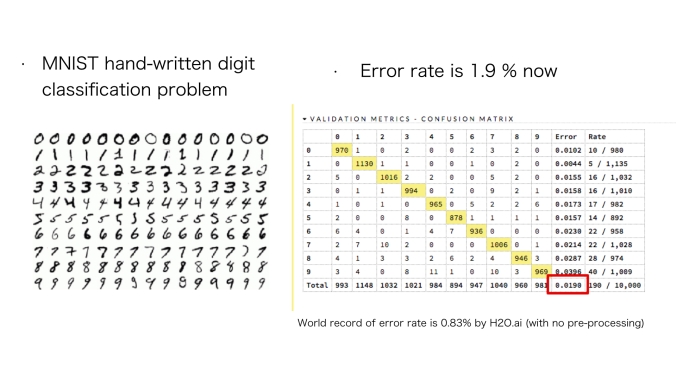

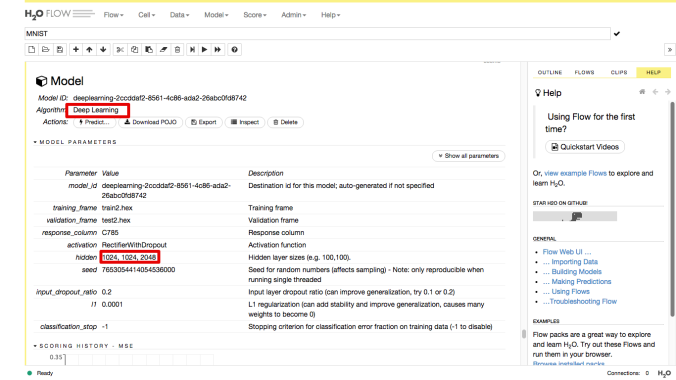

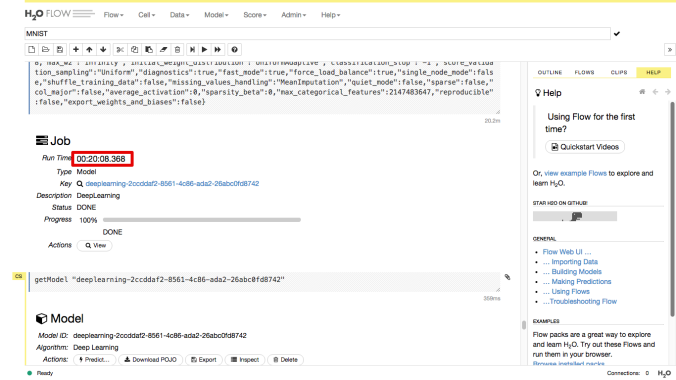

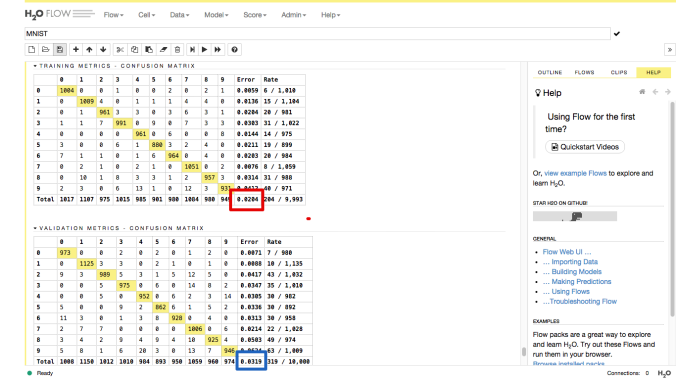

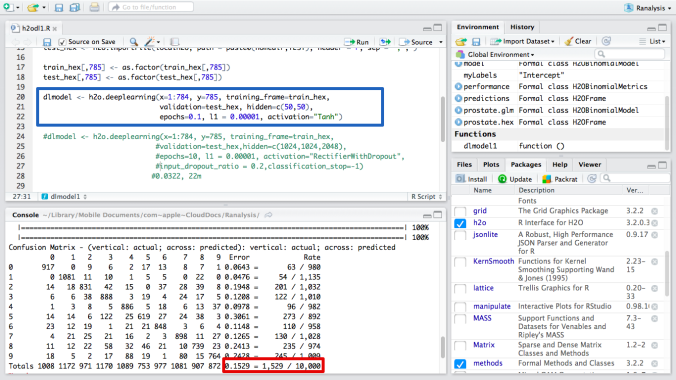

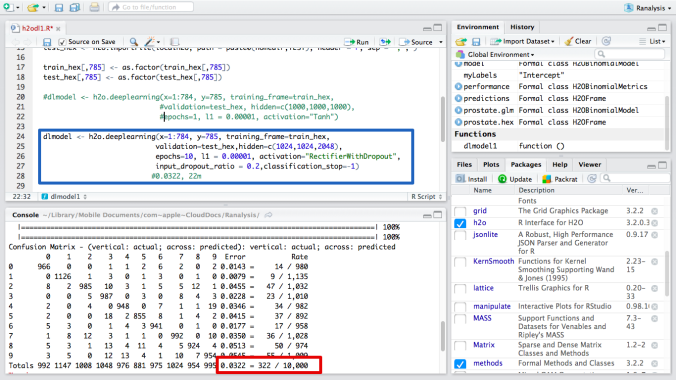

Since November last year, I have tried “Deep Learning” many times for predictive analytics and found it to be very accurate. It is sometimes said that it takes too much time to solve problems, but in my case I could solve many problems within 3 hours, so I consider that “Deep Learning” can solve problems within a reasonable time. In this project I would like to develop skills for tuning parameters effectively, because “Deep Learning” requires several parameters to be set, such as the number of hidden layers. I would like to focus on how the number of layers, the number of neurons, activation functions, regularization and dropout can be set according to the dataset. I think these are key to developing predictive models with good accuracy. I have tackled MNIST handwritten digit classification, and our error rate has improved to 1.9%. This was done with H2O, an awesome analytics tool, on a MacBook Air 11-inch, which is just a normal laptop. To improve our error rate further, I would like to set up my own cluster on AWS. “Spark”, which is open source, is one of the candidates for setting up the cluster.
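To make the tuning discussion above a little more concrete, here is a minimal sketch in plain Python of how a search space over layers, neurons, activation, dropout and regularization might be laid out, together with a count of how many weights each layer configuration implies. It does not train anything and does not call H2O. The key names (`hidden`, `activation`, `input_dropout_ratio`, `l1`) are loosely modeled on H2O's Deep Learning options, but the values, the helper functions `count_parameters` and `grid`, and the MNIST shapes (784 flattened pixels in, 10 digit classes out) are assumptions for illustration only.

```python
from itertools import product

# Assumed MNIST shapes: 28x28 grayscale images flattened to 784 inputs, 10 digit classes.
N_INPUTS = 784
N_CLASSES = 10


def count_parameters(hidden_layers):
    """Count weights plus biases in a fully connected network (hypothetical helper)."""
    sizes = [N_INPUTS] + list(hidden_layers) + [N_CLASSES]
    # Each dense layer has fan_in * fan_out weights and fan_out biases.
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in zip(sizes, sizes[1:]))


# Hypothetical search space; names loosely follow H2O Deep Learning options.
search_space = {
    "hidden": [(200, 200), (512, 512, 512)],   # number of layers and neurons per layer
    "activation": ["Rectifier", "Tanh"],        # activation function
    "input_dropout_ratio": [0.0, 0.2],          # dropout applied to the input layer
    "l1": [0.0, 1e-5],                          # L1 regularization strength
}


def grid(space):
    """Yield every combination of the search space as a dict (full grid search)."""
    keys = list(space)
    for values in product(*(space[k] for k in keys)):
        yield dict(zip(keys, values))


if __name__ == "__main__":
    for config in grid(search_space):
        print(config, count_parameters(config["hidden"]))
```

Under these assumptions, two hidden layers of 200 neurons give 199,210 trainable parameters, while three layers of 512 give 932,362, and the four options with two values each already produce 16 candidate models. This is one reason why tuning “Deep Learning” efficiently, rather than trying every combination, matters.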

3. What businesses can benefit from introducing “Deep Learning”?

“Deep Learning” is very flexible, so it can be applied to many problems across industries. Healthcare, finance, retail, travel, and food and beverage might benefit from introducing “Deep Learning”. Governments could benefit, too. In the project, I would like to focus on the following areas.

- Digital marketing

- Economic analysis

First, I would like to create a database to store the data to be analyzed. Once it is created, I will perform predictive analytics on “Digital marketing” and “Economic analysis”. Best practices will be shared with you so that we can reach our goal “Obtain knowledge and expertise of predictive analytics”. Deep Learning has only recently begun to be applied to these two problems, so I expect new insights to be obtained. For digital marketing, I would like to focus on social media and on measuring the effectiveness of digital marketing strategies. “Natural language processing” has developed at an astonishing speed recently, so I believe there could be a good way to analyze text data. If you have any suggestions on predictive analytics in digital marketing, could you let me know? They are always welcome!

I use open source software to create the predictive analytics environment, so it is very easy for you to create a similar environment on your own system or cloud. I believe open source is key to developing superior predictive models because everyone can participate in the project. You do not need to pay any fees for the tools used in the project because they are open source. Ownership of the problems should be ours, rather than the software vendors’. Why don’t you join us and enjoy it? If you want to receive updates on the project, could you sign up here?

Notice: TOSHI STATS SDN. BHD. and I do not accept any responsibility or liability for loss or damage occasioned to any person or property through using materials, instructions, methods, algorithm or ideas contained herein, or acting or refraining from acting as a result of such use. TOSHI STATS SDN. BHD. and I expressly disclaim all implied warranties, including merchantability or fitness for any particular purpose. There will be no duty on TOSHI STATS SDN. BHD. and me to correct any errors or defects in the codes and the software.