Hello, I am Toshi. I hope you are doing well. Lately I have been thinking about how we can apply data analysis to our daily business, so I would like to introduce “classification” to you.

If you work in a marketing or sales department, you want to know who is likely to buy your products and services. If you work in legal services, you would like to know who will win a case in court. If you work in the financial industry, you would like to know which of your loan customers will default.

All of these cases can be treated as the same kind of problem: “classification”. It means sorting the people or events you are interested in out of the whole population you have on hand. If you have data about who bought your products and services in the past, you can apply “classification” to predict who is likely to buy and make better business decisions. In the same way, the results of classification tell you who is likely to win a case and who is likely to default, together with a numerical measure of certainty called “probability”. Of course, “classification” is not a fortune teller. But it can tell us who is likely to do something, or what is likely to occur, with an associated probability. If “classification” gives your customer a 90% probability of buying, it means that they are highly likely to buy your products and services.
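To make the idea of a probability more concrete, here is a small Python sketch. The customer names, the probabilities, and the 0.5 cut-off are all made up for illustration; in practice the probabilities would come from a classification model and the cut-off would depend on your business.

```python
# Hypothetical purchase probabilities, as a classification model might output them.
purchase_probability = {
    "customer_a": 0.90,
    "customer_b": 0.35,
    "customer_c": 0.62,
}

THRESHOLD = 0.5  # assumed cut-off, not a universal rule


def likely_buyers(probabilities, threshold=THRESHOLD):
    """Return the customers at or above the threshold, highest probability first."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)
    return [name for name, p in ranked if p >= threshold]


print(likely_buyers(purchase_probability))
```

With these made-up numbers, the customer with a 90% probability comes first in the list, so the sales team could contact that customer first.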

I would like to give several examples of “classification” for different businesses. You may want clues to the questions below.

- For sales/marketing personnel

Which movies or songs will reach the Top 10 ranking in the future?

- For personnel in legal services

Who will win the case?

- For personnel in the financial industry or accounting firms

Who will default in the future?

- For personnel in healthcare industries

Who is likely to develop a disease, or to recover from one?

- For personnel in asset management marketing

Who is wealthy enough to be approached about investments?

- For personnel in sports industries

Which team will win the World Series in baseball?

- For engineers

Why did the spaceship engine explode in midair?

We can think of many more examples as long as data is available. To solve the problems above, we need past data that includes the target variable, such as who bought products, who won cases, and who defaulted. Without past data, we can predict nothing. So data is critically important if “classification” is to support better business decisions. I think data is “King”.

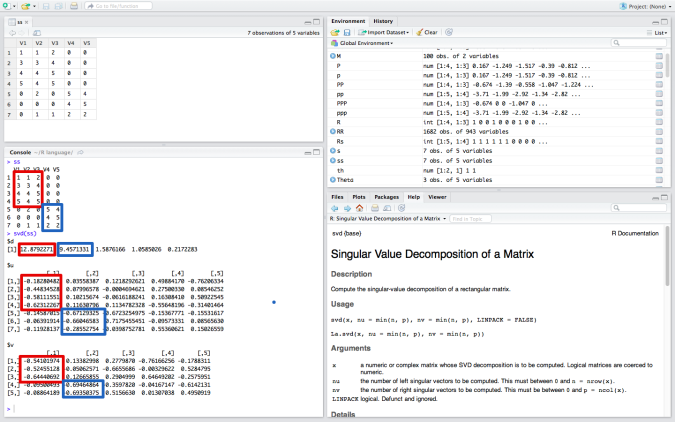

Technically, several methods are used for classification: logistic regression, decision trees, support vector machines, neural networks, and so on. I recommend learning logistic regression first, as it is simple, easy to apply to real problems, and gives you the basic knowledge needed to learn more complex methods such as neural networks.
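To give a first taste of logistic regression, here is a minimal sketch in plain Python, with one input variable and simple gradient descent. The data (number of store visits and whether the customer bought) is invented for illustration, and the function names, learning rate, and number of epochs are my own assumptions. A real project would normally use an established library rather than this hand-written version.

```python
import math


def sigmoid(z):
    """Map any real number to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, learning_rate=0.1, epochs=5000):
    """Fit w and b so that P(y=1 | x) = sigmoid(w * x + b), using gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # predicted probability minus actual outcome
            grad_w += error * x
            grad_b += error
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b


def predict_probability(x, w, b):
    """Estimated probability that the target is 1 for input x."""
    return sigmoid(w * x + b)


# Invented past data: store visits and the target variable (1 = bought, 0 = did not buy).
visits = [1, 2, 3, 4, 5, 6, 7, 8]
bought = [0, 0, 0, 1, 0, 1, 1, 1]

w, b = fit_logistic(visits, bought)
for v in (2, 5, 8):
    print(f"visits={v}: probability of buying = {predict_probability(v, w, b):.2f}")
```

The past data includes the target variable (`bought`), and the fitted model returns a probability for a new customer. In this toy data, customers with more visits bought more often, so the estimated probability rises with the number of visits.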

I would like to explain how classification works in the coming weeks. Do not miss it! See you next week!