Hi friends, I am Toshi. Here is this week’s update to the weekly letter, and the topic is a challenge I took on. Last Saturday and Sunday I entered a data analysis competition on a platform called “Kaggle”. Have you heard of it? Let us find out what the platform is and what it can do for us.

This is the welcome page of Kaggle. We can participate in many challenges without any fee. In some competitions, a prize is awarded to the winner. After you register for a competition, data are provided for you to analyze. Based on the data, we create models to predict unknown results. Once you submit your predictions, Kaggle returns your score and your ranking among all participants.

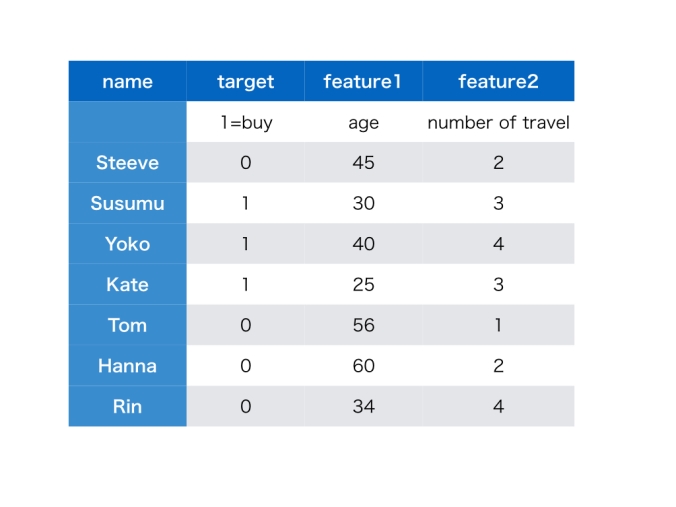

In the competition I entered, the task was to predict which news articles would become popular. So the “target” is “popular” or “not popular”. You may already see that this is a “classification” problem, because the “target” is a “do” or “not do” type. So I decided to use the “logistic curve”, which I explained before, to make the predictions. I always use “R” as my tool for data analysis.
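To make the idea of the “logistic curve” concrete, here is a minimal sketch. It is written in Python rather than R, and it is not the model from the competition: the feature (`word_count`) and the two coefficients are hypothetical values chosen only for illustration. The curve turns a number into a probability between 0 and 1, and a cut-off of 0.5 (also an assumption) turns that probability into “popular” or “not popular”.

```python
import math


def logistic(z):
    """Logistic curve: maps any real number to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def predict_popular(feature_value, intercept, weight, threshold=0.5):
    """Return (probability, label) for one article.

    The probability is the logistic curve applied to a straight line
    (intercept + weight * feature_value). The label is "popular" when
    the probability reaches the threshold.
    """
    probability = logistic(intercept + weight * feature_value)
    label = "popular" if probability >= threshold else "not popular"
    return probability, label


# Hypothetical coefficients, NOT fitted to any real data.
INTERCEPT = -3.0
WEIGHT = 0.5

if __name__ == "__main__":
    # word_count is a made-up feature used only for this illustration.
    for word_count in (2, 6, 10):
        probability, label = predict_popular(word_count, INTERCEPT, WEIGHT)
        print(word_count, round(probability, 3), label)
```

In a real analysis the intercept and weight are not chosen by hand. They are estimated from the training data, and the sketch only shows what happens once they are known.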

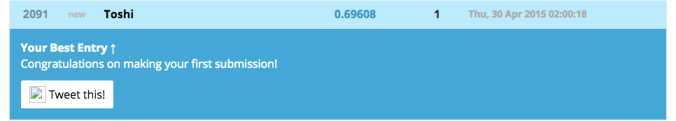

This is my first attempt. I created a very simple model with only one “feature”. The performance was just average, so I needed to improve the model to predict the results more accurately.

Then I converted some data from characters to factors and added more features as inputs. This improved the performance significantly: the score rose from 0.69608 to 0.89563.
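The step from characters to factors can be sketched as follows. This is a rough Python illustration of the idea, not the R code used in the competition, and the `sections` values are made-up sample data. A text column is turned into a fixed set of levels with an integer code for each row, and then into 0/1 columns that a model can take as input. Note that the codes here start at 0, while R numbers factor levels from 1.

```python
def to_factor(values):
    """Map each distinct string to an integer code, similar in spirit to an R factor.

    Levels are sorted alphabetically; codes are 0-based positions in that list.
    """
    levels = sorted(set(values))
    position = {level: index for index, level in enumerate(levels)}
    codes = [position[value] for value in values]
    return levels, codes


def one_hot(values):
    """Turn a list of strings into one 0/1 column per level."""
    levels, codes = to_factor(values)
    rows = [[1 if code == index else 0 for index in range(len(levels))]
            for code in codes]
    return levels, rows


if __name__ == "__main__":
    # Made-up example values for a hypothetical "section" column.
    sections = ["Business", "Sports", "Business", "Arts"]
    levels, codes = to_factor(sections)
    print(levels, codes)
    print(one_hot(sections)[1])
```

Once a text column is expressed this way, it can be added to the model as extra features next to the numeric ones.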

In the final assessment, the data used for scoring are different from the data used in the interim assessments. My final score was 0.85157. Unfortunately, I could not reach 0.9. I should have tried other classification methods, such as random forest, to improve the score. But anyway, this is like a game, because every time I submit a result I get a score. It is very exciting when the score improves!

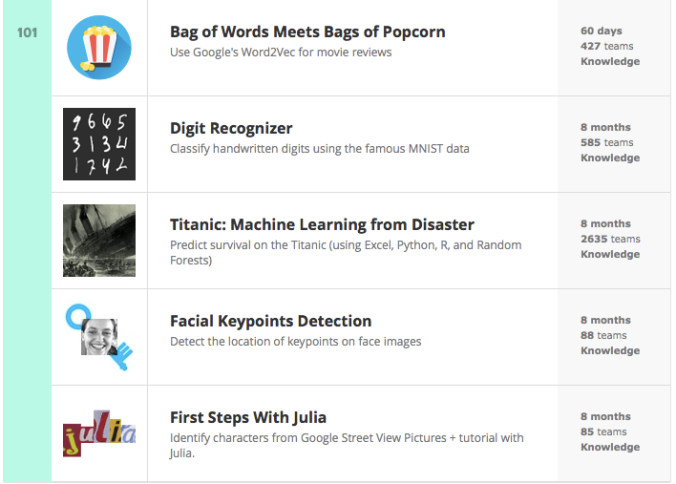

The competitions in this list are for beginners. Anyone can try these problems after signing up. I like “Titanic”. In this challenge we predict who survived the disaster. Can we tell who was likely to survive based on data, such as where passengers stayed on the ship? This is also a “classification” problem, because the “target” is “survive” or “not survive”.

You may not be interested in data science itself. But these competitions are worth trying for everyone, because in the digital economy most business managers have opportunities to discuss data analysis with data scientists. If you know in advance how data is analyzed, you can communicate with data scientists smoothly and effectively. That helps us get what we want from data in order to make better business decisions. I learned a lot from this challenge. Now it’s your turn!