I started using deep learning four years ago and have run countless experiments since then. I thought I knew most of what there was to know about it. But I found I was wrong when I tried a new computational engine called the “TPU” today. I want to share my experience because I think it is useful for anyone interested in artificial intelligence. Let us start.

1. TPU is more than 10X faster

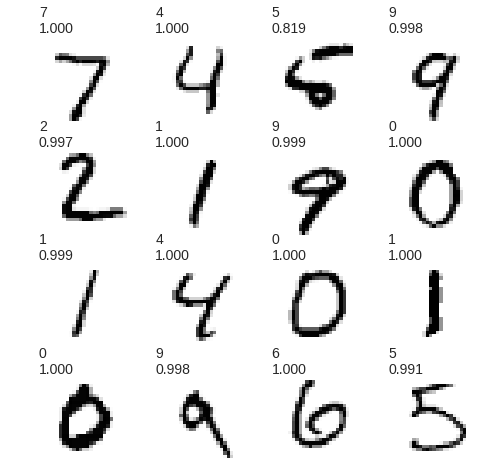

Deep learning is one of the most powerful techniques in artificial intelligence. Google uses it in many products, such as Google Translate. The problem is that deep learning needs a massive amount of computational power. For example, let us develop a classifier that recognizes the digits 0 to 9. The images below come from the MNIST dataset, which is the “hello world” of deep learning. I want a computer to classify each of them automatically.
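To make the task concrete, here is a minimal sketch of what such a digit classifier could look like in tf.keras. The architecture, the layer sizes, and the names `build_mnist_classifier` and `preprocess` are my own assumptions for illustration. They are not taken from the experiment described in this post.

```python
import tensorflow as tf

NUM_CLASSES = 10  # the digits 0 through 9


def build_mnist_classifier():
    """Build a small CNN for 28x28 grayscale digits (hypothetical architecture)."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(28, 28, 1)),
        tf.keras.layers.Conv2D(32, 3, activation="relu"),
        tf.keras.layers.MaxPooling2D(),
        tf.keras.layers.Flatten(),
        tf.keras.layers.Dense(128, activation="relu"),
        # One probability per digit class.
        tf.keras.layers.Dense(NUM_CLASSES, activation="softmax"),
    ])
    model.compile(
        optimizer="adam",
        loss="sparse_categorical_crossentropy",  # labels are integers 0..9
        metrics=["accuracy"],
    )
    return model


def preprocess(images):
    """Scale uint8 pixels to [0, 1] and add a trailing channel axis."""
    return (images.astype("float32") / 255.0)[..., None]
```

The dataset itself can be loaded with `tf.keras.datasets.mnist.load_data()`, and training is then a call such as `model.fit(preprocess(x_train), y_train, epochs=5)`, where the number of epochs is again only an example value.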

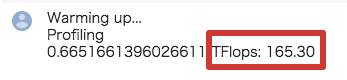

Two years ago, I did this on my 11-inch MacBook Air. It took around 80 minutes to complete training. MNIST is one of the simplest training datasets in computer vision, so if I want to develop a more complex system, such as a self-driving car, my MacBook Air is useless because the calculation would take far longer. Fortunately, I can now try the TPU, which is a specialized processor for deep learning. I found it is incredibly fast: it completes the training in less than one minute! 80 minutes vs 1 minute. I tried many times, but the result was always the same. So I checked the speed of calculation. It reported more than 160 TFLOPS. By that number, the TPU is comparable to the supercomputers of 2005. The TPU is the fastest processor I have ever tried. This is amazing.
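The “80 minutes vs 1 minute” comparison can be turned into a speedup factor with simple arithmetic. The helper name `speedup` below is hypothetical, and the two numbers are the rounded timings from this post, not a careful benchmark.

```python
def speedup(baseline_minutes, accelerated_minutes):
    """Return how many times faster the accelerated run is than the baseline."""
    if accelerated_minutes <= 0:
        raise ValueError("accelerated_minutes must be positive")
    return baseline_minutes / accelerated_minutes


# Timings from this post: about 80 minutes on the laptop,
# and an upper bound of 1 minute on the TPU.
factor = speedup(80, 1)
print(f"TPU speedup: at least {factor:.0f}x")
```

Because the TPU run finished in less than one minute, 80x is a lower bound on the speedup for this particular experiment.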

2. TPU is easy to use!

However fast the TPU is, it also needs to be easy to use. If you had to rewrite your code to use the TPU, you might hesitate to use it. If you use “TensorFlow”, the open source deep learning framework by Google, there is no problem. Just small modifications are needed. If you use other frameworks, you need to wait until the TPU supports them. I am not sure when that will happen. In my case, I mainly use tf.keras on TensorFlow, so there is no need to worry. You can see the code for my experiment here.
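To give an idea of how small the “small modifications” can be, here is a sketch based on the TensorFlow 2.x distribution API. This is an assumption on my side: the experiment linked in this post may use a different, older API, and Colab TPU runtimes may need extra arguments to `TPUClusterResolver`. The function names `pick_strategy` and `build_model_in_scope` and the tiny placeholder model are hypothetical.

```python
import tensorflow as tf


def pick_strategy():
    """Return a TPUStrategy if a TPU is found, else the default strategy."""
    try:
        resolver = tf.distribute.cluster_resolver.TPUClusterResolver()
    except ValueError:
        # No TPU detected in this environment: fall back to CPU/GPU.
        return tf.distribute.get_strategy()
    tf.config.experimental_connect_to_cluster(resolver)
    tf.tpu.experimental.initialize_tpu_system(resolver)
    return tf.distribute.TPUStrategy(resolver)


def build_model_in_scope(strategy):
    """Create and compile a placeholder model inside the strategy scope."""
    # Variables created inside the scope are placed on the strategy's devices.
    with strategy.scope():
        model = tf.keras.Sequential([
            tf.keras.Input(shape=(28, 28)),
            tf.keras.layers.Flatten(),
            tf.keras.layers.Dense(10, activation="softmax"),
        ])
        model.compile(
            optimizer="adam",
            loss="sparse_categorical_crossentropy",
            metrics=["accuracy"],
        )
    return model
```

With this pattern, a call such as `model = build_model_in_scope(pick_strategy())` is the only TPU-specific part. The model definition and the later `model.fit(...)` call stay the same as on a CPU or GPU.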

3. TPU is available on Colab for free!

Now we know the TPU is fast and easy to use. For my business, I also need tools that are inexpensive. But there is no need to worry. The TPU is available for free on Google Colab, a web-based experiment environment. Although there are some limitations (such as a maximum session time of 12 hours), I think it is fine for developing minimum viable models. If you need a TPU for formal projects, Google also provides a paid service. So we can use it as a free or a paid service, depending on our needs. I recommend using the free TPU on Colab to learn how the TPU works.

Technically, this TPU is v2. Google has already announced TPU v3, a more powerful version, so these services might become more powerful in the near future. Today’s experience is just the beginning of the story. Do you want to try the TPU?

1) MNIST with tf.Keras and TPUs on colab

Notice: Toshi Stats Co., Ltd. and I do not accept any responsibility or liability for loss or damage occasioned to any person or property through using materials, instructions, methods, algorithms or ideas contained herein, or acting or refraining from acting as a result of such use. Toshi Stats Co., Ltd. and I expressly disclaim all implied warranties, including merchantability or fitness for any particular purpose. There will be no duty on Toshi Stats Co., Ltd. and me to correct any errors or defects in the code and the software.